
ADetailer

ADetailer is an extension for the stable diffusion webui, similar to Detection Detailer, except that it uses ultralytics instead of mmdet.

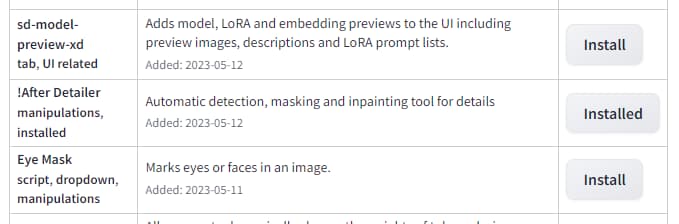

Install

(from Mikubill/sd-webui-controlnet)

- Open the "Extensions" tab.

- Open the "Install from URL" tab inside it.

- Enter https://github.com/Bing-su/adetailer.git into "URL for extension's git repository".

- Press the "Install" button.

- Wait 5 seconds, and you will see the message "Installed into stable-diffusion-webui\extensions\adetailer. Use Installed tab to restart".

- Go to the "Installed" tab, click "Check for updates", and then click "Apply and restart UI". (You can use the same method to update extensions later.)

- Completely restart the A1111 webui, including your terminal. (If you do not know what a "terminal" is, you can reboot your computer instead: turn it off and turn it on again.)

You can now install it directly from the Extensions tab.

You DON'T need to download any model from huggingface.

Options

| Model, Prompts | | |
|---|---|---|
| ADetailer model | Determine what to detect. | None = disable |
| ADetailer prompt, negative prompt | Prompts and negative prompts to apply | If left blank, it will use the same as the input. |
| Skip img2img | Skip img2img. In practice, this works by changing the step count of img2img to 1. | img2img only |

| Detection | | |
|---|---|---|
| Detection model confidence threshold | Only objects with a detection model confidence above this threshold are used for inpainting. | |
| Mask min/max ratio | Only use masks whose area, as a fraction of the entire image area, lies between these ratios. | |
| Mask only the top k largest | Only use the k objects with the largest bbox area. | 0 to disable |

If you want to exclude objects in the background, try setting the min ratio to around 0.01.
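The three detection options can be read as successive filters. The following is a minimal sketch of that reading, not the extension's actual code: the `Detection` class, the `filter_detections` function, the default values, the order of the filters, and the use of the bbox area as a stand-in for the mask area are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Detection:
    """Hypothetical detection record: bbox is (x1, y1, x2, y2) in pixels."""
    bbox: Tuple[float, float, float, float]
    confidence: float


def bbox_area(det: Detection) -> float:
    x1, y1, x2, y2 = det.bbox
    return max(0.0, x2 - x1) * max(0.0, y2 - y1)


def filter_detections(dets: List[Detection], image_w: int, image_h: int,
                      confidence: float = 0.3, min_ratio: float = 0.0,
                      max_ratio: float = 1.0, top_k: int = 0) -> List[Detection]:
    image_area = image_w * image_h
    # 1. Keep only detections above the confidence threshold.
    kept = [d for d in dets if d.confidence > confidence]
    # 2. Keep only detections whose area ratio lies within [min_ratio, max_ratio].
    #    Assumption: bbox area approximates the mask area here.
    kept = [d for d in kept
            if min_ratio <= bbox_area(d) / image_area <= max_ratio]
    # 3. Optionally keep only the k largest by bbox area (0 disables this step).
    if top_k > 0:
        kept = sorted(kept, key=bbox_area, reverse=True)[:top_k]
    return kept
```

With a 512x512 image, a min ratio of 0.01 drops any detection smaller than roughly 51x51 pixels, which is why it helps with small background objects.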

| Mask Preprocessing | | |
|---|---|---|
| Mask x, y offset | Moves the mask horizontally (x) and vertically (y) by the given offset. | |
| Mask erosion (-) / dilation (+) | Enlarge or reduce the detected mask. | opencv example |
| Mask merge mode | None: Inpaint each mask<br>Merge: Merge all masks and inpaint<br>Merge and Invert: Merge all masks and invert, then inpaint | |

Applied in this order: x, y offset → erosion/dilation → merge/invert.
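To make the order concrete, here is a small pure-Python sketch of the three stages on binary masks. It is an illustration under stated assumptions, not the extension's implementation: the function names are hypothetical, positive `dy` is assumed to move the mask down in image coordinates, and each unit of erosion or dilation is assumed to grow or shrink the mask by one pixel using a 3x3 neighbourhood (the extension uses OpenCV, whose kernel handling may differ).

```python
from typing import List

Mask = List[List[int]]  # 1 = inpaint this pixel, 0 = leave it alone


def shift(mask: Mask, dx: int, dy: int) -> Mask:
    """Move the mask by (dx, dy); pixels pushed off the canvas are dropped."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            nx, ny = x + dx, y + dy
            if mask[y][x] and 0 <= nx < w and 0 <= ny < h:
                out[ny][nx] = 1
    return out


def morph(mask: Mask, amount: int) -> Mask:
    """amount > 0 dilates, amount < 0 erodes, one 3x3 step per unit."""
    h, w = len(mask), len(mask[0])
    for _ in range(abs(amount)):
        out = [[0] * w for _ in range(h)]
        for y in range(h):
            for x in range(w):
                near = [mask[j][i]
                        for j in range(max(0, y - 1), min(h, y + 2))
                        for i in range(max(0, x - 1), min(w, x + 2))]
                out[y][x] = int(any(near)) if amount > 0 else int(all(near))
        mask = out
    return mask


def preprocess(masks: List[Mask], dx: int = 0, dy: int = 0,
               amount: int = 0, mode: str = "None") -> List[Mask]:
    # Stage 1 and 2: offset, then erosion/dilation, applied per mask.
    masks = [morph(shift(m, dx, dy), amount) for m in masks]
    if mode == "None" or not masks:
        return masks
    # Stage 3: merge all masks with a pixel-wise OR, optionally invert.
    h, w = len(masks[0]), len(masks[0][0])
    merged = [[int(any(m[y][x] for m in masks)) for x in range(w)]
              for y in range(h)]
    if mode == "Merge and Invert":
        merged = [[1 - v for v in row] for row in merged]
    return [merged]
```

Because merging happens last, the offset and erosion/dilation act on each detected object separately before the masks are combined.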

Inpainting

Each option corresponds to an option of the same name on the inpaint tab. Please refer to the inpaint tab for details on how to use each one.

ControlNet Inpainting

You can use ControlNet inpainting if the ControlNet extension and ControlNet models are installed.

The inpaint, scribble, lineart, openpose, and tile ControlNet models are supported. Once you choose a model, the preprocessor is set automatically. This works independently of the model set in the ControlNet extension.

Advanced Options

API request example: wiki/API

ui-config.json entries: wiki/ui-config.json

[SEP], [SKIP] tokens: wiki/Advanced

Media

- 🎥 The most detailed guide anywhere to using After Detailer (adetailer), Part 1 [Stable Diffusion]

- 🎥 The most detailed guide anywhere to using After Detailer (adetailer), Part 2 [Stable Diffusion]

Model

| Model | Target | mAP 50 | mAP 50-95 |
|---|---|---|---|
| face_yolov8n.pt | 2D / realistic face | 0.660 | 0.366 |
| face_yolov8s.pt | 2D / realistic face | 0.713 | 0.404 |
| hand_yolov8n.pt | 2D / realistic hand | 0.767 | 0.505 |
| person_yolov8n-seg.pt | 2D / realistic person | 0.782 (bbox)<br>0.761 (mask) | 0.555 (bbox)<br>0.460 (mask) |
| person_yolov8s-seg.pt | 2D / realistic person | 0.824 (bbox)<br>0.809 (mask) | 0.605 (bbox)<br>0.508 (mask) |
| mediapipe_face_full | realistic face | - | - |
| mediapipe_face_short | realistic face | - | - |
| mediapipe_face_mesh | realistic face | - | - |

The yolo models can be found on huggingface Bingsu/adetailer.

Additional Model

Put your ultralytics yolo model in webui/models/adetailer. The model name should end with .pt or .pth.

It must be a bbox detection or segmentation model. All of the model's labels are used.
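As a quick way to check which files in that folder meet the naming rule, the sketch below lists everything ending in .pt or .pth. The function name `find_extra_models` is hypothetical, and this only checks the file extension; it does not verify that a file is a valid detection or segmentation model, nor does it reproduce how the extension itself scans the folder.

```python
from pathlib import Path
from typing import List


def find_extra_models(models_dir: str) -> List[str]:
    """Return the names of files ending in .pt or .pth, sorted alphabetically."""
    root = Path(models_dir)
    if not root.is_dir():
        return []  # Folder does not exist yet: nothing to list.
    return sorted(
        p.name for p in root.iterdir()
        if p.is_file() and p.suffix in {".pt", ".pth"}
    )


if __name__ == "__main__":
    # Path taken from the text above; adjust it to your own webui location.
    for name in find_extra_models("webui/models/adetailer"):
        print(name)
```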

How it works

ADetailer works in three simple steps.

1. Create an image.

2. Detect objects with a detection model and create a mask image.

3. Inpaint using the image from step 1 and the mask from step 2.
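The three steps can be sketched as a small control-flow skeleton. This is only an illustration: `detail`, `toy_generate`, `toy_detect`, and `toy_inpaint` are hypothetical stand-ins for the webui's generation, the detection model, and the inpainting pass, and inpainting each mask one after another is an assumption that matches the "None" merge mode.

```python
from typing import Callable, List

Image = List[List[int]]
Mask = List[List[int]]


def detail(generate: Callable[[], Image],
           detect: Callable[[Image], List[Mask]],
           inpaint: Callable[[Image, Mask], Image]) -> Image:
    image = generate()                # Step 1: create an image.
    for mask in detect(image):        # Step 2: detect objects, one mask each.
        image = inpaint(image, mask)  # Step 3: inpaint the masked region.
    return image


# Toy stand-ins so the skeleton can be run without any model.
def toy_generate() -> Image:
    return [[0, 9, 0], [0, 0, 0]]


def toy_detect(image: Image) -> List[Mask]:
    # "Detects" bright pixels and returns a single mask covering them.
    return [[[int(v > 5) for v in row] for row in image]]


def toy_inpaint(image: Image, mask: Mask) -> Image:
    # Replaces masked pixels with 1 and leaves the rest untouched.
    return [[1 if m else v for v, m in zip(row, mrow)]
            for row, mrow in zip(image, mask)]


result = detail(toy_generate, toy_detect, toy_inpaint)
```

If the detector finds nothing, the loop body never runs and the image from step 1 is returned unchanged.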